| Backlink to the CGSE Documentation Website | |

|---|---|

Developer Manual (this document) |

|

Changelog

- 08/10/2024 — v1.12

-

-

Added a section on the data dumper, see Section 5.4

-

- 06/09/2024 — v1.11

-

-

Filled the section on writing docstrings, see Chapter 4.

-

- 06/03/2024 — v1.10

-

-

Added a story about finding a left-open socket… see Chapter 28.

-

- 27/02/2024 — v1.9

-

-

Added part on Essential Toolkit and Infratsructure Elements, see Chapter 17 and Chapter 18.

-

Added some more info on Setup in Chapter 8.

-

- 12/02/2024 — v1.8

-

-

Started a chapter on SpaceWire communication, see Chapter 15.

-

Added a chapter on the notification system for control servers, see Chapter 7.

-

- 18/06/2023 — v1.7

-

-

Added a section on setting up a development environment to contribute to the CGSE and/or TS, see Chapter 26.

-

added a backlink to the CGSE Documentation web site for your convenience. It’s at the top of the HTML page.

-

- 04/05/2023 — v1.6

-

-

Added explanation about data distribution and monitoring message queues used by the DPU Processor to publish information and data, see Chapter 14.

-

Added some more meat into the description of the arguments for the

@dynamic_commanddecorator, see Section 6.6.2. -

Added an explanation of the communication with and commanding of the N-FEE in the inner loop of the DPUProcessor, see Section 14.1.1.

-

- 03/04/2023 — v1.5

-

-

Added a section 'Services' in the Commanding Concepts section, see Section 6.4.

-

- 12/03/2023 — v1.4

-

-

Added description of the arguments that can be used with the

@dynamic_commanddecorator, see Section 6.6.2. -

Added a section on creating multiple control servers for identical devices, see Section 6.1.1.

-

- 09/02/2023 — v1.3

-

-

Updated section on version and release numbers, see Chapter 2.

-

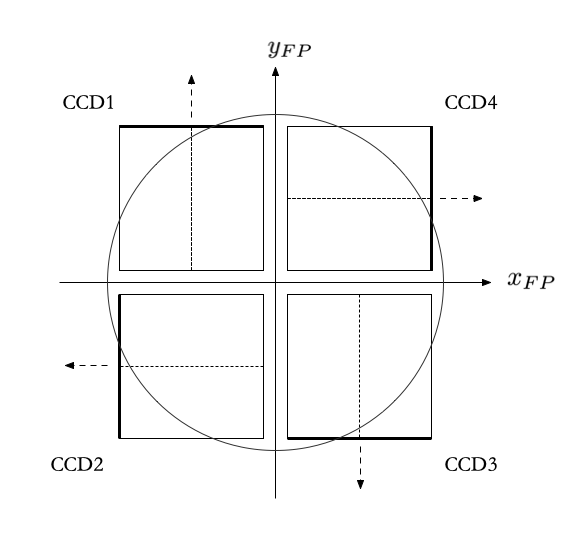

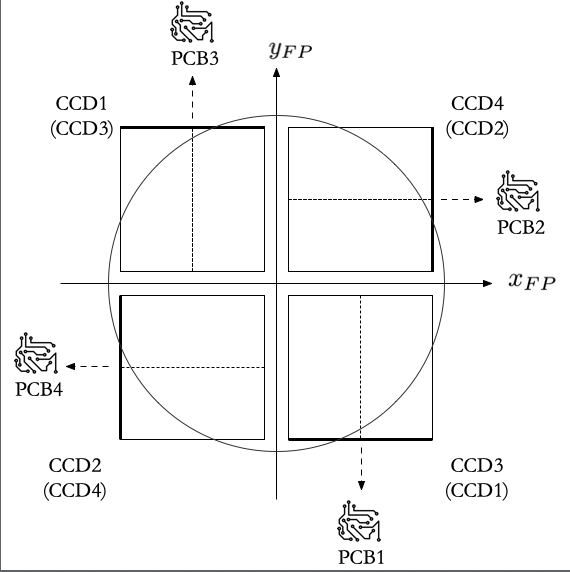

Fixed some formatting issues in the CCD numbering section.

-

Colophon

Copyright © 2022, 2023 by the KU Leuven PLATO CGSE Team

1st Edition — February 2023

This manual is written in PyCharm using the AsciiDoc plugin. The PDF Book version is processed with asciidoctor-pdf.

The manual is available as HTML from ivs-kuleuven/github.io. The HTML pages are generated with Hugo which is an OSS static web-pages generator. From this site, you can also download the PDF books.

The source code is available in a GitHub repository at ivs-kuleuven/plato-cgse-doc.

When you find an error or inconsistency or you have some improvements to the text, feel free to raise an issue or create a pull request. Any contribution is greatly appreciated and will be mentioned in the acknowledgement section.

Conventions used in this Book

We try to be consistent with the following typographical conventions:

- Italic

-

Indicates a new term or …

- Constant width

-

Used for code listings, as well as within paragraphs to refer to program elements like variable and function names, data type, environment variables (

ALL_CAPS), statements and keywords. - Constant width between angle brackets

<text> -

Indicates

textthat should be replaced with user-supplied values or by values determined by context. The brackets should thereby be omitted.

When you see a $ … in code listings, this is a command you need to execute in a terminal (omitting the dollar sign itself). When you see >>> … in code listings, that is a Python expression that you need to execute in a Python REPL (here omitting the three brackets).

- Setup versus setup

-

I make a distinction between Setup (with a capital S) and setup (with a small s). The Setup is used when I talk about the object as defined in a Python environment, i.e. the entity itself that contains all the definitions, configuration and calibration parameters of the equipment that make up the complete test setup (notice the small letter 's' here).

(sometimes you may find setup in the document which really should be 'set up' with a space)

- Using TABs

-

Some of the manuals use TABs in their HTML version. Below, you can find an example of tabbed information. You can select between FM and EM info and you should see the text change with the TAB.

| This feature is only available in the HTML version of the documents. If you are looking at the PDF version of the document, the TABs are shown in a frame where all TABs are presented successively. |

-

FM

-

EM

In this TAB we present FM specific information.

In this TAB we present EM specific information.

- Using Collapse

-

Sometimes, information we need to display is too long and will make the document hard to read. This happens mostly with listings or terminal output and we will make that information collapsible. By default, the info will be collapsed, press the small triangle before the title (or the title itself) to expand it.

| In the PDF document, all collapsible sections will be expanded. |

A collapsible listing

plato-data@strawberry:/data/CSL1/obs/01151_CSL1_chimay$ ls -l total 815628 -rw-r--r-- 1 plato-data plato-data 7961 Jun 20 10:38 01151_CSL1_chimay_AEU-AWG1_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 9306 Jun 20 10:38 01151_CSL1_chimay_AEU-AWG2_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 309375 Jun 20 10:38 01151_CSL1_chimay_AEU-CRIO_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 42950 Jun 20 10:38 01151_CSL1_chimay_AEU-PSU1_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 43239 Jun 20 10:38 01151_CSL1_chimay_AEU-PSU2_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 42175 Jun 20 10:38 01151_CSL1_chimay_AEU-PSU3_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 42327 Jun 20 10:38 01151_CSL1_chimay_AEU-PSU4_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 42242 Jun 20 10:38 01151_CSL1_chimay_AEU-PSU5_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 42269 Jun 20 10:38 01151_CSL1_chimay_AEU-PSU6_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 67149 Jun 20 10:38 01151_CSL1_chimay_CM_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 20051 Jun 20 10:38 01151_CSL1_chimay_DAQ6510_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 105 Jun 20 10:38 01151_CSL1_chimay_DAS-DAQ6510_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 19721 Jun 20 10:38 01151_CSL1_chimay_DPU_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 22833 Jun 20 10:38 01151_CSL1_chimay_FOV_20230620_095819.csv -rw-rw-r-- 1 plato-data plato-data 833754240 Jun 20 10:34 01151_CSL1_chimay_N-FEE_CCD_00001_20230620_cube.fits -rw-r--r-- 1 plato-data plato-data 292859 Jun 20 10:38 01151_CSL1_chimay_N-FEE-HK_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 8877 Jun 20 10:38 01151_CSL1_chimay_OGSE_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 19841 Jun 20 10:38 01151_CSL1_chimay_PM_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 188419 Jun 20 10:38 01151_CSL1_chimay_PUNA_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 7662 Jun 20 10:38 01151_CSL1_chimay_SMC9300_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 19781 Jun 20 10:38 01151_CSL1_chimay_SYN_20230620_095819.csv -rw-r--r-- 1 plato-data plato-data 147569 Jun 20 10:38 01151_CSL1_chimay_SYN-HK_20230620_095819.csv plato-data@strawberry:/data/CSL1/obs/01151_CSL1_chimay$

Contributors

| Name | Affiliation | Role |

|---|---|---|

Rik Huygen |

KU Leuven |

Software architect |

Sara Regibo |

KU Leuven |

Senior software engineer and EGSE expert |

Pierre Royer |

KU Leuven |

Instrument expert |

Sena Gomashie |

SRON |

EGSE expert at test house |

Jens Johansen |

SRON |

EGSE expert at test house |

Nicolas Beraud |

IAS |

EGSE expert at test house |

Pierre Guiot |

IAS |

EGSE expert at test house |

Angel Valverde |

INTA |

EGSE expert at test house |

Jesus Saiz |

INTA |

EGSE expert at test house |

Preface

During the development/implementation process it became clear that the project was bigger then we anticipated and we would need to document how the Common-EGSE developed into this full-blown product.

During the analysis, design and implementation phase we learned about so many libraries like ZeroMQ, Matplotlib, Numpy, Pandas, Rich, Textual, … but also the standard Python library is so extremely rich of well-designed modules and language concepts that we integrated into our design and implementation.

Many of the applied concepts might seem obvious for the core developers of a/this product, but it might not be for the contributors of e.g. device drivers. A full in-depth description of the system and how it was developed is therefore indispensable.

The text itself gruw over the years, it started in reStructuredText and was processed with Sphinx, then we moved to Markdown and Mkdocs because of its simplicity and thinking this would encourage us to write and update the text more often. Finally, we ended up using Asciidoc which is a bit of a compromise between the previous two.

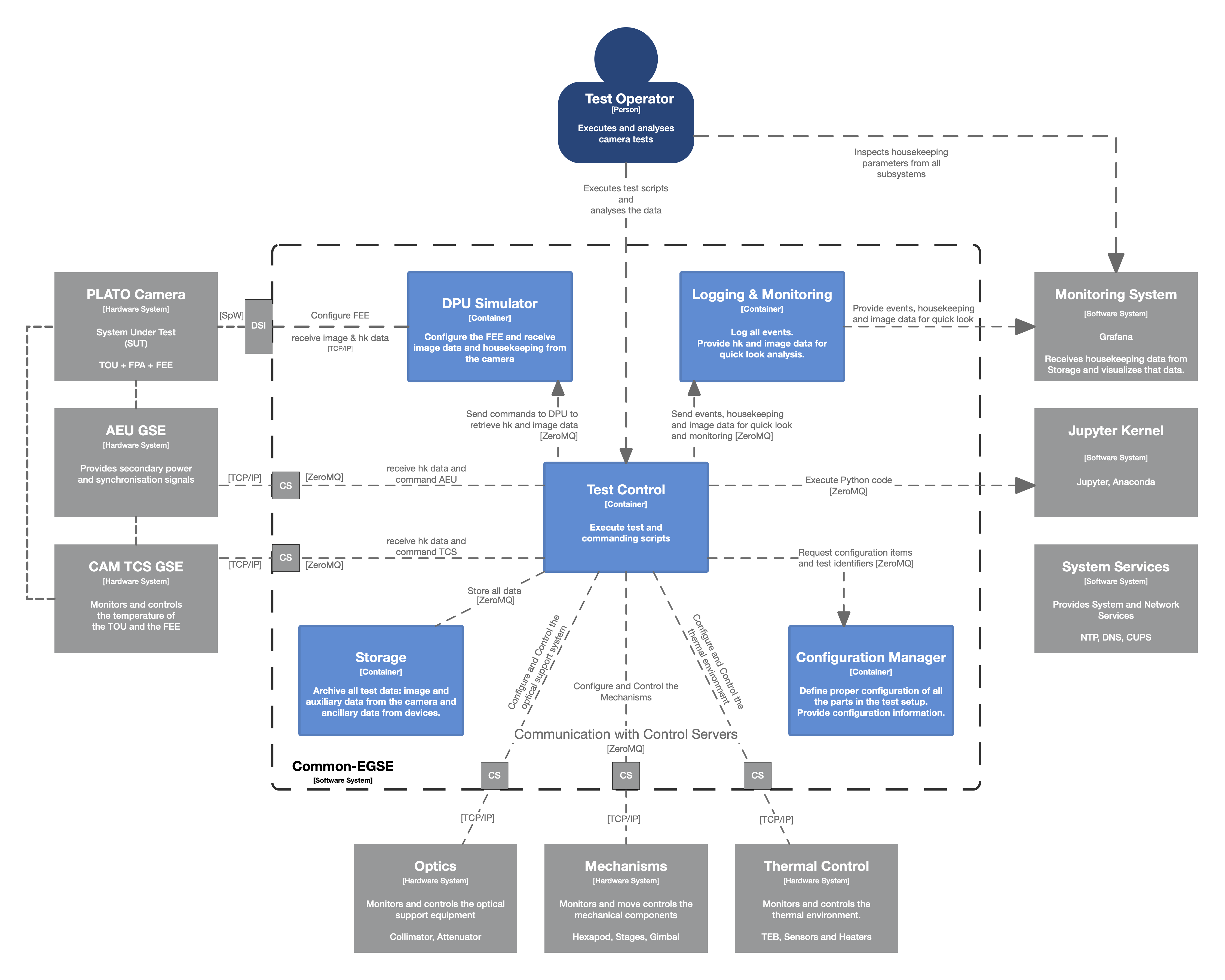

Introduction

This document is the developer guide for the PLATO Common-EGSE, used at CSL (Liège, Belgium) and the test houses at IAS (Paris, France), INTA (Madrid, Spain) and SRON (Groningen, The Netherlands) and describes the Common-EGSE system from the developer’s point of view. This document explains how to contribute to the code, which versions of libraries that are used, compiler options for device libraries written in C, access to the GitHub repo, coding style,and much more.

The current release contains 303 Python files, together they contain 95389 lines of which there are 49360 lines of Python code. About 50% of the code files contain actual code, the rest is documentation, comments and blank lines. It is impossible for one person to fully understand all details of the system and only for that reason is it essential to have good and comprehensive documentation.

Please, read the installation instructions to know how to install the software and configure your Python installation.

List of TODO topics

-

List the terminal commands in a simple overview table or something

-

Explain System Preferences in the settings.yaml file (and the local settings)

-

The Logger uses ZeroMQ to pass messages. That means these this ZeroMQ needs to be closed when your application ends.

-

Where do we descibe the Synoptics Manager, where does this process fit into the design fiures etc.

-

Somewhere we need to describe all the modules in the CGSE. For example, the

device.pymodule defines a lot of interesting classes, including Exceptions, the device connection interfaces, and the device transport. -

Somewhere it shall be clearly explained where all these processes need to be started, on the egse-server or egse-client, manually or by Systemd, … or clicking an icon?

-

Should we maybe divide the developer manual in part 1 with coding advice and part 2 with the description of the CGSE code?

-

Explain how the Failure — Success — Response classes work

-

Explain how you can run the GUIs and even some control servers on your local machine using port forwarding. As an example, use the TCS or the Hexapod. This is only for hardware device connections, it is not for core services, run them as normal on your local machine.

-

Find better names for the Parts in the manual

-

WHere do we discuss devices with Ethernet interfaces and USB interfaces, the differences, the pros and cons, are there other still in use, like GPIO?

-

Describe how to detect which process is holding a connection on Linux and macOS. This happens sometimes when a process crashes or when the developer did not properly close all connection, or when a process is hanging. You will get a

OSError: [Errno 48] Address already in use. -

Describe the RegisterMap of the N-FEE and how and when it is synced with the DPU Processor. What is the equivalent of the FPGA (loading the Registers on every long pulse) in the DPU Processor (DPU Internals vs NFEEState).

-

Describe what the num_cycles parameter is and how this is used in the DPU Processor. That this parameter can be negative and what this means and why this decision (to not have too many if statements in the run() of the DPU Processor.)

-

Describe that the DPU Processor is time critical

-

Describe why certain control servers start a sub-process → TCS, DPU, …

-

Describe how to switch your development environment from one TH to another, e.g. CSL1 → CSL2. Which environment variables need to be adapted, which directories need to exist etc.

Documents and Acronyms

Applicable documents

| [AD-01] |

PLATO Common-EGSE Requirements Specification, PLATO-KUL-PL-RS-0001, issue 1.4, 18/03/2020 |

| [AD-02] |

PLATO Common-EGSE Design Description, PLATO-KUL-PL-DD-0001, issue 1.2, 13/03/2020 |

| [AD-03] |

PLATO Common-EGSE Interface Control Document, PLATO-KUL-PL-ICD-0002, issue 0.1, 19/03/2020 |

Reference Documents

| [RD-01] |

PLATO Common-EGSE Installation Guide, PLATO-KUL-PL-MAN-0002 |

| [RD-02] |

PLATO Common-EGSE User Manual, PLATO-KUL-PL-MAN-0001 |

| [RD-03] | |

| [RD-04] |

Acronyms

AEU |

Ancillary Electronics Unit |

API |

Application Programming Interface |

CAM |

Camera |

CCD |

Charged-Coupled Device |

CGSE |

Common-EGSE |

CSL |

Centre Spatial de Liège |

CSV |

Comma-Separated Values |

COT |

Commercial off-the-shelf |

CTI |

Charge Transfer Inefficiency |

CTS |

Consent to Ship |

DPU |

Data Processing Unit |

DSI |

Diagnostic SpaceWire Interface |

EGSE |

Electrical Ground Support Equipment |

EOL |

End Of Life |

FAQ |

Frequently Asked Questions |

FEE |

Front End Electronics |

FITS |

Flexible Image Transport System |

FPA |

Focal Plane Assembly/Array |

GSE |

Ground Support Equipment |

GUI |

Graphical User Interface |

HDF5 |

Hierarchical Data Format version 5 (File format) |

HK |

Housekeeping |

IAS |

Institut d’Astrophysique Spatiale |

ICD |

Interface Control Document |

LDO |

Leonardo space, Italy |

MGSE |

Mechanical Ground Support Equipment |

MMI |

Man-Machine Interface |

NCR |

Non-Conformance Report |

NRB |

Non-Conformance Review Board |

OBSID |

Observation Identifier |

OGSE |

Optical Ground Support Equipment |

OS |

Operating System |

Portable Document Format |

|

PID |

Process Identifier |

PLATO |

PLAnetary Transits and Oscillations of stars |

PPID |

Parent Process Identifier |

PLM |

Payload Module |

PVS |

Procedure Variation Sheet |

REPL |

Read-Evaluate-Print Loop, e.g. the Python interpreter prompt |

RMAP |

Remote Memory Access Protocol |

SFT |

Short Functional Test |

SpW |

SpaceWire |

SQL |

Structured Query Language |

SRON |

Stichting Ruimte-Onderzoek Nederland |

SUT |

System Under Test |

SVM |

Service Module |

TBC |

To Be Confirmed |

TBD |

To Be Decided or To Be Defined |

TBW |

To Be Written |

TC |

Telecommand |

TCS |

Thermal Control System |

TH |

Test House |

TM |

Telemetry |

TOU |

Telescope Optical Unit |

TS |

Test Scripts |

TUI |

Text-based User Interface |

TV |

Thermal Vacuum |

UM |

User Manual |

USB |

Universal Serial Bus |

YAML |

YAML Ain’t Markup Language |

Caveats

Place general warnings here on topics where the user might make specific assumption on the code or the usage of the code.

Setup

-

It’s not a good idea to create keys with spaces and special characters, although it is allowed in a dictionary and it works without problems, the key will not be available as an attribute because it will violate the Python syntax.

>>> from egse.setup import Setup >>> s = Setup() >>> s["a key with spaces"] = 42 >>> print(s) NavigableDict └── a key with spaces: 42 >>> s['a key with spaces'] 42 >>> s.a key with spaces Input In [18] s.a key with spaces ^ SyntaxError: invalid syntax -

When submitting a Setup to the configuration manager, the Setup is automatically pushed to the GitHub repository with the message provided with the

submit_setup()function. No need anymore to create a pull request. There are however two thing to keep in mind here:-

do not add a Setup manually to your PLATO_CONF_FILE_LOCATION or to the repository. That will invalidate the repo and the cache that is maintained by the configuration manager

-

you should do a git pull regularly on your local machine and also on the egse-client if the folder is not NFS mounted

-

Part I — Development Notions

1. Style Guide

This part of the developer guide contains instructions for coding styles that are adopted for this project.

The style guide that we use for this project is PEP8. This is the standard for Python code and all IDEs, parsers and code formatters understand and work with this standard. PEP8 leaves room for project specific styles. A good style guide that we can follow is the Google Style Guide.

The following sections will give the most used conventions with a few examples of good and bad.

1.1. TL;DR

| Type | Style | Example |

|---|---|---|

Classes |

CapWords |

ProcessManager, ImageViewer, CommandList, Observation, MetaData |

Methods & Functions |

lowercase with underscores |

get_value, set_mask, create_image |

Variables |

lowercase with underscores |

key, last_value, model, index, user_info |

Constants |

UPPERCASE with underscores |

MAX_LINES, BLACK, COMMANDING_PORT |

Modules & packages |

lowercase no underscores |

dataset, commanding, multiprocessing |

1.2. General

-

name the class or variable or function with what it is, what it does or what it contains. A variable named

user_listmight look good at first, but what if at some point you want to change the list to a set so it can not contain duplicates. Are you going to rename everything intouser_setor woulduser_infobe a better name? -

never use dashes in any name, they will raise a

SyntaxError: invalid syntax. -

we introduce a number of relaxations to not brake backward compatibility for the sake of a naming convention. As described in A Foolish Consistency is the Hobgoblin of Little Minds: Consistency with this style guide is important. Consistency within a project is more important. Consistency within one module or function is the most important. […] do not break backwards compatibility just to comply with this PEP!

1.3. Classes

Always use CamelCase (Python uses CapWords) for class names. When using acronyms, keep them all UPPER case.

-

class names should be nouns, like Observation

-

make sure to name classes distinctively

-

stick to one word for a concept when naming classes, i.e. words like

ManagerorControllerorOrganizerall mean similar things. Choose one word for the concept and stick to it. -

if a word is already part of a package or module, don’t use the same word in the class name again.

Good names are: Observation, CalibrationFile, MetaData, Message, ReferenceFrame, URLParser.

1.4. Methods and Functions

A function or a method does something (and should only do one thing, SRP=Single Responsibility Principle), it is an action, so the name should reflect that action.

Always use lowercase words separated with underscores.

Good names are: get_time_in_ms(), get_commanding_port(), is_connected(), parse_time(), setup_mask().

When working with legacy code or code from another project, names may be in camelCase (with the first letter a lower case letter). So we can in this case use also getCommandPort() or isConnected() as method and function names.

1.5. Variables

Use the same naming convention as functions and methods, i.e. lowercase with underscores.

Good names are: key, value, user_info, model, last_value

Bad names: NSegments, outNoise

Take care not to use builtins: list, type, filter, lambda, map, dict, …

Private variables (for classes) start with an underscore: _name or _total_n_args.

In the same spirit as method and function names, the variables can also be in camelCase for specific cases.

1.6. CONSTANTS

Use ALL_UPPER_CASE with underscores for constants. Use constants always within a name space, not globally.

Good names: MAX_LINES, BLACK, YELLOW, ESL_LINK_MODE_DISABLED

1.7. Modules and Packages

Use simple words for modules, preferably just one word like datasets or commanding or storage or extensions. If two words are unavoidable, just concatenate them, like multiprocessing or sampledata or testdata. If needed for readability, use an underscore to separate the words, e.g. image_analysis.

1.8. Import Statements

-

group and sort import statements

-

never use the form

from <module> import * -

always use absolute imports in scripts

Be careful that you do not name any modules the same as a module in the Python standard library. This can result in strange effects and may result in an AttributeError. Suppose you have named a module math in the egse directory and it is imported and used further in the code as follows:

from egse import math

...

# in some expression further down the code you might use

math.exp(a)This will result in the following runtime error:

File "some_module.py", line 8, in <module>

print(math.exp(a))

AttributeError: module 'egse.math' has no attribute 'exp'Of course this is an obvious example, but it might be more obscure like e.g. in this GitHub issue: 'module' object has no attribute 'Cmd'.

2. Version numbers

The version of Python that you use to run the code shall be \$>=\$ 3.8. You can determine the version of Python with the following terminal command:

$ python3 -V Python 3.8.3

The version of the Common-EGSE (CGSE) and the test scripts (TS) that are installed on your system can be determined as follows:

$ python3 -m egse.version CGSE version in Settings: 2023.6.0+CGSE CGSE installed version = 2023.6.0+cgse $ python3 -m camtest.version CAMTEST version in Settings: 2023.6.0+TS CAMTEST git version = 2023.6.0+TS-0-g975d38e

The version numbers that are shown above have different parts that all have their meaning. We use semantic versioning to define our version numbers. Let’s use the CGSE version [2023.6.0+CGSE] to explain each of the parts:

-

2023.6.0is the core version number withmajor.minor.patchnumbers. The major number is the year in which the software was released. The minor number is the week number in which the configuration control board (CCB) gathered to approve a new release. The patch is used for urgent bug fixes within the week between two CCBs. -

For release candidates a postfix can be added like

2023.6.0-rc.1meaning that we are dealing with the first release candidate for the 2023.6.0 release. We should avoid using release candidates in the operational environment because these versions might not have been fully tested. In an official release of the software, this part in the versioning will be omitted. -

CGSEis a release for the Common-EGSE repository. The Test scripts will haveTShere. There is alsoCONFfor theplato-cgse-confrepository which contains all Setups and related files.

Then we have some additional information in the version number of the test scripts, in the above example [2023.6.0+TS-0-g975d38e].

-

0is the number of commits that have been pushed and merged to the GitHub repository since the creation of the release. This number shall be 0 at least for the CGSE on an operational system. If this number \$!=\$ 0, it means you have not installed the release properly as explained in the installation instructions and you are probably using a development version with less tested or even un-tested code. This number can differ from 0 for the test scripts as we do not yet have a proper installation procedure for the releases. -

g975d38eis the abbreviated git hash number[1] for this release. The first lettergindicates the use ofgit.

The VERSION and RELEASE are also defined in the settings.yaml and should match the first three parts of the version number explained above. If not, it was probably forgotten to update these numbers when preparing the release. You can use these version numbers in your code by importing from egse.version and camtest.version.

from egse.version import VERSION as CGSE_VERSION from camtest.version import VERSION as CAMTEST_VERSION

3. Best Practices for Error and Exception Handling

All errors and exceptions should be handled to prevent the Application from crashing. This is definitely true for server applications and services, e.g. device control servers, the storage and configuration manager. But also GUI applications should not crash due to an unhandled exception. It is important that, at least on the server side, all exceptions are logged.

3.1. When do I catch exceptions?

Exceptions shouldn’t really be caught unless you have a really good plan for how to recover. If you have no such plan, let the exception propagate to a higher level in your software until you know how to handle it. In your own code, try to avoid raising an exception in the first place, design your code and classes such that you minimise throwing exceptions.

If some code path simply must broadly catch all exceptions (i.e. catch the base Exception class) — for example, the top-level loop for some long-running persistent process — then each such caught exception must write the full stack trace to the log, along with a timestamp. Not just the exception type and message, but the full stack trace. This can easily be done in Python as follows:

try:

main_loop()

except Exception:

logging.exception("Caught exception at the top level main_loop().")This will log the exception and stacktrace with logging level ERROR.

For all other except clauses — which really should be the vast majority — the caught exception type must be as specific as possible. Something like KeyError, ConnectionTimeout, etc. That should be the exception that you have a plan for, that you can handle and recover from at this level in your code.

3.2. How do I catch Exceptions?

Use the try/except blocks around code that can potentially generate an exception and your code can recover from that exception.

Any resources such as open files or connections can be closed or cleaned up in the finally clause. Remember that the code within finally will always be executed, regardless of an Exception is thrown or not. In the example below, the function checks if there is internet connection by opening a socket to a host which is expected to be available at all times. The socket is closed in the finally clause even when the exception is raised.

import socket

import logging

def has_internet(host="8.8.8.8", port=53, timeout=3):

"""Returns True if we have internet connection, False otherwise."""

try:

socket_ = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

socket_.settimeout(timeout)

socket_.connect((host, port))

return True

except socket.error as ex:

logging.info(f"No Internet: Unable to open socket to {host}:{port} [{ex}]")

return False

finally:

if socket_ is not None:

socket_.close()3.3. What about the 'with' Statement?

When you use a with statement in your code, resources are automatically closed when an Exception is thrown, but the Exception is still thrown, so you should put the with block inside a try/except block.

As of Python 3 the socket class can be used as a context manager. The example above can thus be rewritten as follows:

import socket

import logging

def has_internet(host="8.8.8.8", port=53, timeout=3):

"""Returns True if we have internet connection, False otherwise."""

try:

with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as socket_:

socket_.settimeout(timeout)

socket_.connect((host, port))

return True

except socket.error as ex:

logging.warning(f"No Internet: Unable to open socket to {host}:{port} [{ex}]")

return FalseAnother example from our own code base shows how to handle a Hexapod PUNA Proxy error. Suppose you want to send some commands to the PUNA within a context manager as follows:

from egse.hexapod.symetrie.puna import PunaProxy

with PunaProxy() as proxy:

proxy.move_absolute(0, 0, 2, 0, 0, 0)

# Send other commands to the Puna Hexapod.When you execute the above code and the PUNA control server is not active, the Proxy will not be able to connect and the context manager will raise a ConnectionError.

PunaProxy could not connect to its control server at tcp://localhost:6700. No commands have been loaded.

Control Server seems to be off-line, abandoning

Traceback (most recent call last):

File "t.py", line 3, in <module>

with PunaProxy() as proxy:

File "/Users/rik/Git/plato-common-egse/src/egse/proxy.py", line 129, in __enter__

raise ConnectionError("Proxy is not connected when entering the context.")

ConnectionError: Proxy is not connected when entering the context.So, the with .. as statement should be put into a try .. except clause. Since we probably cannot handle this exception at this level, we only log the exception and re-raise the connection error.

from egse.hexapod.symetrie.puna import PunaProxy

try:

with PunaProxy() as proxy:

proxy.move_absolute(0,0,2,0,0,0)

# Send other commands to the Puna Hexapod.

except ConnectionError as exc:

logger.exception("Could not send commands to the Hexapod PUNA because the control server is not reachable.")

raise (1)| 1 | use a single raise statement, don’t repeat the ConnectionError here |

3.4. Why not just return an error code?

In languages like the C programming language is it custom to return error codes or -1 as a return code from a function to indicate a problem has occurred.

The main drawback here is that your code, when calling such a function, must always check it’s return codes, which is often forgotten or ignored.

In Python, throw exceptions instead of returning an error code. Exceptions ensure that failures do not go unnoticed because the calling code didn’t check a return value.

3.5. When to Test instead of Try?

The question basically is if we should check for a common condition without possibly throwing an exception. Python does this different than other languages and prefers the use of exceptions. There are two different opinions about this, EAFP and LBYL. From the Python documentation:

EAFP: it’s Easier to Ask for Forgiveness than Permission.

This common Python coding style assumes the existence of valid keys or attributes and catches exceptions if the assumption proves false. This clean and fast style is characterized by the presence of many try and except statements. The technique contrasts with the LBYL style common to many other languages such as C.

LBYL: Look Before You Leap.

This coding style explicitly tests for pre-conditions before making calls or lookups. This style contrasts with the EAFP approach and is characterized by the presence of many if statements. In a multi-threaded environment, the LBYL approach can risk introducing a race condition between “the looking” and “the leaping”.

Consider the following two cases:

if response[-2:] != '\r\n':

raise ConnectionError(f"Missing termination characters in response: {response}")def convert_to_float(value: str) -> float:

try:

return float(value)

except ValueError:

return math.nanIf you expect that 90% of the time your code will just run as expected, use the try/except approach. It will be faster if exceptions really are exceptional. If you expect an abnormal condition more than 50% of the time, then using if is probably better.

In other words, the method to choose depends on how often you expect the event to occur.

-

Use exception handling if the event doesn’t occur very often, that is, if the event is truly exceptional and indicates an error (such as an unexpected end-of-file). When you use exception handling, less code is executed in normal conditions.

-

Check for error conditions in code if the event happens routinely and could be considered part of normal execution. When you check for common error conditions, less code is executed because you avoid exceptions.

3.6. When to re-throw an Exception?

Sometimes you just want to do something and rethrow the same Exception. This is easy in Python as shown in the following example.

try:

# do some work here

except SomeException:

logging.warning("...", exc_info=True)

raise (1)| 1 | use only a raise statement, without the SomeException added. This will rethrow the exact same exception that was catched. |

In some cases, it is best to have the stacktrace printed out with the logging message. I’ve include the exc_info=True in the example.

3.7. What about Performance?

It is nearly free to set up a try/except block (an exception manager), while an if statement always costs you.

Bear in mind that Python internally uses exceptions frequently. So, when you use an if statement to check e.g. for the existence of an attribute (the hasattr() method), this builtin function will call getattr(obj, name) and catch AttributeError. So, instead of doing the following:

if hasattr(command, 'name'):

command_name = getattr(command, 'name')

else:

command_name = Noneyou can better use the try/except.

try:

command_name = getattr(command, 'name')

except AttributeError:

command_name = None3.8. Can I raise my own Exception?

As a general rule, try to use builtin exceptions from Python, especially ValueError, IndexError, NameError, and KeyError. Don’t invent your own 'parameter' or 'arguments' exceptions if the cause of the exception is clear from the builtin. The hierarchy of Exceptions can be found in the Python documentation at Builtin-Exceptions > Exception Hierarchy.

When the connection with a builtin exception is not clear however, create your own exception from the Exception class.

class DeviceNotFoundError(Exception):

"""Raised when a device could not be located or loaded."""

pass

Even if we are talking about Exceptions all the time, your own Exceptions should end with Error instead of Exception. The standard Python documentation also has a section on User Defined Exceptions that you might want to read.

|

In some situations you might want to group many possible sources of internal errors into a single exception with a clear message. For example, you might want to write a library module that throws its own exception to hide the implementation details, i.e. the user of your library shouldn’t have to care which extra libraries you use to get the job done.

Since this will hide the original exception, if you throw your own exception, make sure that it contains every bit of information from the originally caught exception. You’ll be grateful for that when you read the log files that are send to you for debugging.

The example below is taken from the actual source code. This code catches all kinds of exceptions that can be raised when connecting to a hardware device over a TCP socket. The caller is mainly interested of course if the connection could be established or not, but we always include the information from the original exception with the raise..from clause.

def connect(self):

# Sanity checks

if self.is_connection_open:

raise PMACException("Socket is already open")

if self.hostname in (None, ""):

raise PMACException("ERROR: hostname not initialized")

if self.port in (None, 0):

raise PMACException("ERROR: port number not initialized")

# Create a new socket instance

try:

self.sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

self.sock.setblocking(1)

self.sock.settimeout(3)

except socket.error as e_socket:

raise PMACException("ERROR: Failed to create socket.") from e_socket

# Attempt to establish a connection to the remote host

try:

logger.debug(f'Connecting a socket to host "{self.hostname}" using port {self.port}')

self.sock.connect((self.hostname, self.port))

except ConnectionRefusedError as e_cr:

raise PMACException(f"ERROR: Connection refused to {self.hostname}") from e_cr

except socket.gaierror as e_gai:

raise PMACException(f"ERROR: socket address info error for {self.hostname}") from e_gai

except socket.herror as e_h:

raise PMACException(f"ERROR: socket host address error for {self.hostname}") from e_h

except socket.timeout as e_timeout:

raise PMACException(f"ERROR: socket timeout error for {self.hostname}") from e_timeout

except OSError as e_ose:

raise PMACException(f"ERROR: OSError caught ({e_ose}).") from e_ose

self.is_connection_open = True3.9. When should I use Assertions?

Use assertions only to check for invariants. Assertions are meant for development and should not replace checking conditions or catching exceptions which are meant for production. A good guideline to use assert statements is when they are triggering a bug in your code. When your code assumes something and acts upon the assumption, it’s recommended to protect this assumption with an assert. This assert failing means your assumption isn’t correct, which means your code isn’t correct.

def _load_register_map(self):

# This method shall only be called when self._name is 'N-FEE' or 'F-FEE'.

assert self._name in ('N-FEE', 'F-FEE')Another example is:

# If this assertion fails, there is a flaw in the algorithm above

assert tot_n_args == n_args + n_kwargs, (

f"Total number of arguments ({tot_n_args}) doesn't match "

f"# args ({n_args}) + # kwargs ({n_kwargs})"

)

Remember also that running Python with the -O option will remove or disable assertions. Therefore, never put expressions from your normal code flow in an assertion. They will not be executed when the optimizer is used and your code will break gracefully.

|

3.10. What are Errors?

This is a naming convention thing… names of user defined sub-classes of Exception should end with Error.

3.11. Cascading Exceptions

TBW

3.12. Logging Exceptions

Generally, you should not log exceptions at lower levels, but instead throw exceptions and rely on some top level code to do the logging. Otherwise, you’ll end up with the same exception logged multiple times at different layers in your application.

3.13. Resources

Some of the explanations were taken shamelessly from the following resources:

4. Writing docstrings

For this project we use the Google format for docstrings. Below, you can find a quick example of the docstring for the humanize_bytes() function in the egse.bits module.

def humanize_bytes(n: int, base: Union[int, str] = 2, precision: int = 3) -> str:

"""

Represents the size `n` in human readable form, i.e. as byte, KiB, MiB, GiB, ...

Please note that, by default, I use the IEC standard (International Engineering Consortium)

which is in `base=2` (binary), i.e. 1024 bytes = 1.0 KiB. If you need SI units (International

System of Units), you need to specify `base=10` (decimal), i.e. 1000 bytes = 1.0 kB.

Args:

n (int): number of byte

base (int, str): binary (2) or decimal (10)

precision (int): the number of decimal places [default=3]

Returns:

a human readable size, like 512 byte or 2.300 TiB.

Raises:

ValueError: when base is different from 2 (binary) or 10 (decimal).

Examples:

>>> assert humanize_bytes(55) == "55 bytes"

>>> assert humanize_bytes(1024) == "1.000 KiB"

>>> assert humanize_bytes(1000, base=10) == "1.000 kB"

>>> assert humanize_bytes(1000000000) == '953.674 MiB'

>>> assert humanize_bytes(1000000000, base=10) == '1.000 GB'

>>> assert humanize_bytes(1073741824) == '1.000 GiB'

>>> assert humanize_bytes(1024**5 - 1, precision=0) == '1024 TiB'

"""

The first line shall be a short summary of the functionality ended by a period or question mark. The rest, more detailed information, shall go after a blank line that separates it from the summary line.

You can use the following sections:

-

Args: a list of the parameter names with a short description and possibly defaults values.

-

Returns: or Yields: describe the returns value(s) their content and type. This section can be ommitted when the docstring starts with the word 'Returns' or 'Yields", or when the function doesn’t return anything (None).

-

Raises: a list of the Exceptions that might be raised by this function.

-

Examples: provide some simple examples and their possible outcome.

4.1. What needs to go into the docstring and what not?

The docstring shall contain all information that the user of the function, method, class or module needs to use it. The user in this case can be a developer or a normal user of a Python function. Try to adapt the type and detail of the information in the docstring accordingly.

Do not describe how the functionality is implemented, instead describe what functionality is provided and how it can be used.

4.2. Where will the docstring show up?

We will provide an API documentation that contains all docstrings of all classes, functions, methods and modules that are part of the CGSE. If you are using a Python IDE, use the available functionality to visualize the docstring information of a function. For PyCharm e.g. this is the 'F1' key when the cursor is on the function name.

If you are in the IPython REPL, you can type the function name followed by q question mark:

In [2]: humanize_bytes?

Signature: humanize_bytes(n: int, base: Union[int, str] = 2, precision: int = 3) -> str

Docstring:

Represents the size `n` in human readable form, i.e. as byte, KiB, MiB, GiB, ...

Please note that, by default, I use the IEC standard (International Engineering Consortium)

which is in `base=2` (binary), i.e. 1024 bytes = 1.0 KiB. If you need SI units (International

System of Units), you need to specify `base=10` (decimal), i.e. 1000 bytes = 1.0 kB.

Args:

n (int): number of byte

base (int, str): binary (2) or decimal (10)

precision (int): the number of decimal places [default=3]

Returns:

a human readable size, like 512 byte or 2.300 TiB

Raises:

ValueError when base is different from 2 (binary) or 10 (decimal).

Examples:

assert humanize_bytes(55) == "55 bytes"

assert humanize_bytes(1024) == "1.000 KiB"

assert humanize_bytes(1000, base=10) == "1.000 kB"

assert humanize_bytes(1000000000) == '953.674 MiB'

assert humanize_bytes(1000000000, base=10) == '1.000 GB'

assert humanize_bytes(1073741824) == '1.000 GiB'

assert humanize_bytes(1024**5 - 1, precision=0) == '1024 TiB'

File: ~/Documents/PyCharmProjects/plato-common-egse/src/egse/bits.py

Type: function

In [3]:

Part II — Core Concepts

5. Core Services

What are considered core services?

The core services are provided by five control servers that run on the egse-server machine: the log manager, the configuration manager, the storage manager, the process manager, and the synoptics manager. Each of these services is installed as a systemd service on the operational machine. That means they are monitored by the system and when one of these processes crashes, it is automatically restarted. As a developer, you can start the core services on your local machine or laptop with the invoke command from a terminal.

$ cd ~/git/plato-common-egse $ invoke start-core-egse

The services are described in full detail in the following sections.

5.1. The Log Manager

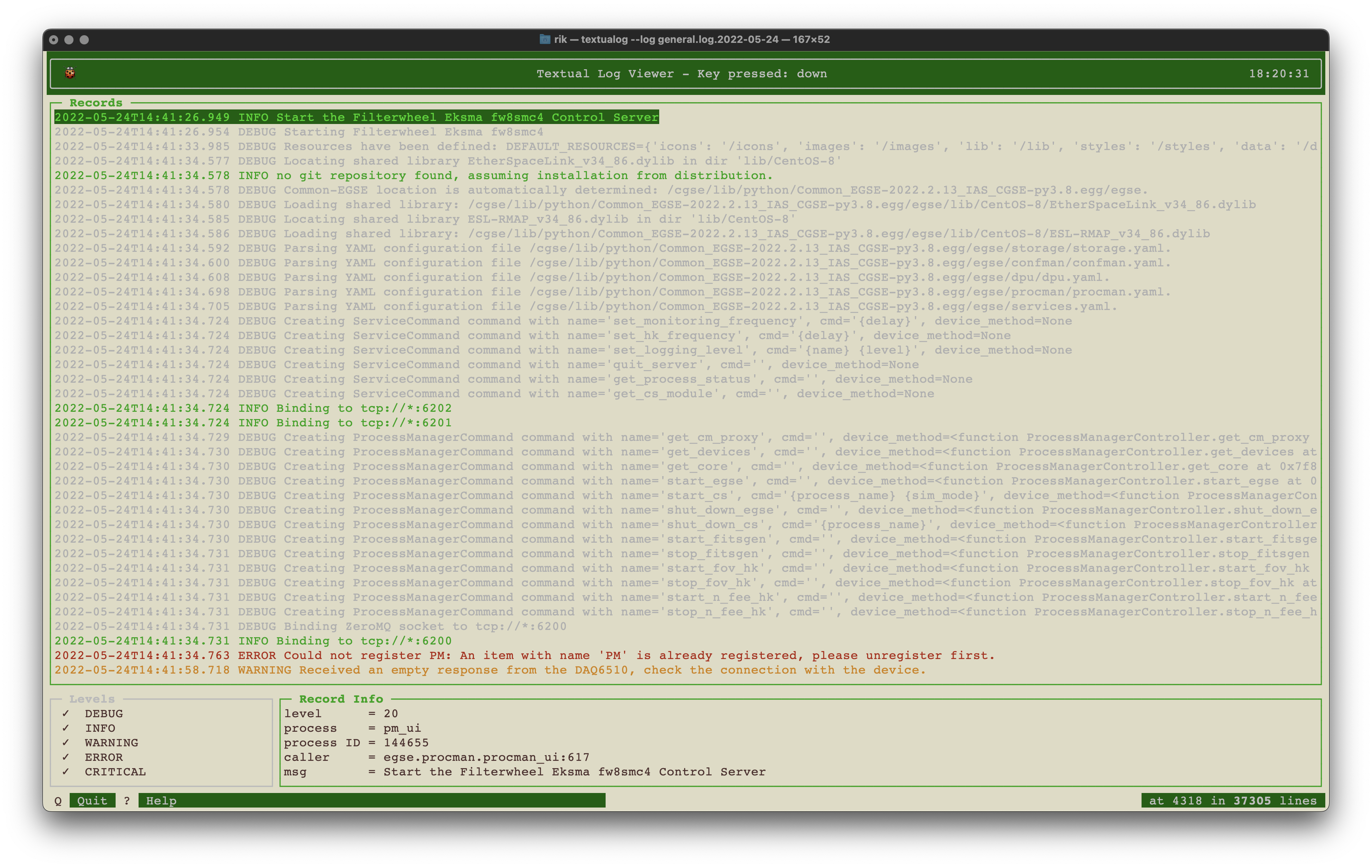

TBW

-

local logging using the standard logging module

-

log messages sent to the log manager log_cs via ZeroMQ

-

why am I not seeing some log messages in my terminal or Python console? → only level INFO and higher

-

should I configure the logger, how?

-

Cutelog and Textualog → user manual?

5.2. The Configuration Manager

The configuration manager is a core service…

-

The GlobalState

-

The Setup

-

The Observation concept

5.3. The Storage Manager

The storage manager is responsible for storing the following data:

-

all housekeeping from the devices that are connected by a control server

-

all housekeeping and CCD data that is transferred from the N-FEE and the F-FEE during camera tests.

-

Origin = defined in the ICD, include a list of predefined origin strings

-

Persistence: CSV / HDF5 / FITS / TXT / SQLite

5.3.1. File Naming

-

see also the ICD

5.3.2. File Formats

-

see also the ICD

5.4. The Data Dumper

We have some problems with performance in reading out the N-FEE when we request both sides of a CCD. The reason for that is probably a series of events, such as waiting for a busy storage manager, garbage collection, data processing, etc. When starting to develop the code for the fast camera, we want to avoid these performance problems as much as possible and we made a number of decisions that are explained below.

-

The DPU Processor should not have to wait for confirmation of the storage manager when saving data. So, we should change from a REQ-REP protocol to a PUSH-PULL protocol.

-

The DPU Processor shall do less processing of information before sending messages to other components. Actually, the process that reads out the spacewire data packets from the F-FEE shall be as simple as possible and delegate all possible tasks to other processes.

-

Communication between processes can be optimised using inter-process communication (IPC) instead of Transmission Control Protocol (TCP).

So, we started out designing the new F-DPU Processes.

There are two main problems that we identified with the Data Storage. (1) The Storage Manager forms a bottleneck handling all data that is generated by the system, not only writing science data to the HDF5 files, but also handling (and duplicating) all housekeeping and auxiliary data that is generated by all the devices used during the test. (2) Using the request-reply (REQ-REP) protocol, the process that generates the data and sends it to the storage manager has to wait for a reply from the Storage Manager with a confirmation of the data being written properly. This might lead to a delay when the Storage manager is very busy writing information to different files and formats.

We decided to keep the functionality of the Storage Manager as it is, don’t touch it since it is still used for all N-CAM activities. In addition, we will build a new process that will only handle the F-FEE HDF5 data from the F-DPU Processor. That new process we call the Data Dumper (data_dump). All data that we retrieve from the F-FEE will be sent from the F-DPU Processor to the data dumper using a PUSH-PULL protocol. This ZeroMQ message protocol can push messages to multiple workers, but we will only connect with one worker, i.e. the data dumper.

Our seconds design point above says the F-DPU Processor shall do as few processing as possible and delegate those tasks to other processes. The reason is to make sure the F-DPU Processor can readout the F-FEE data fast enough to avoid any buffer overflow errors. For that reason we put a process in between the F-DPU Processor and the data dumper which will take over the identification of data packets, and processing those packets before they are sent to the data dumper. Communication between the F-DPU Processor and the new data processor is also based on the PUSH-PULL message protocol.

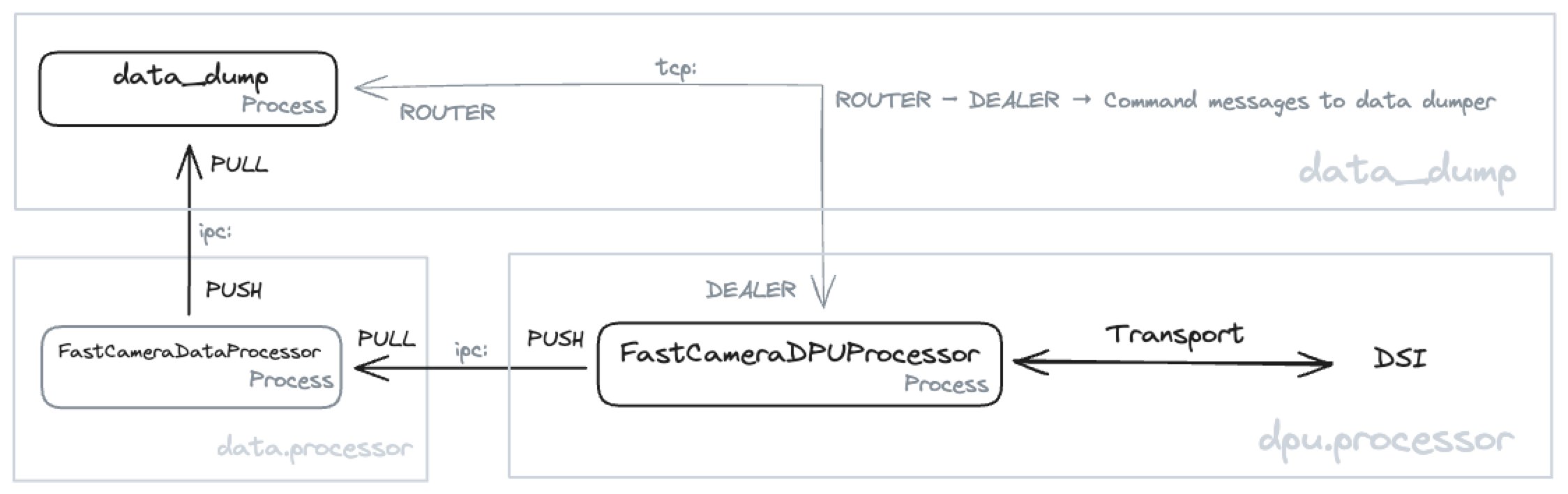

It’s time to put that into a diagram in the hope it will clarify. Don’t worry, we will talk you trough it.

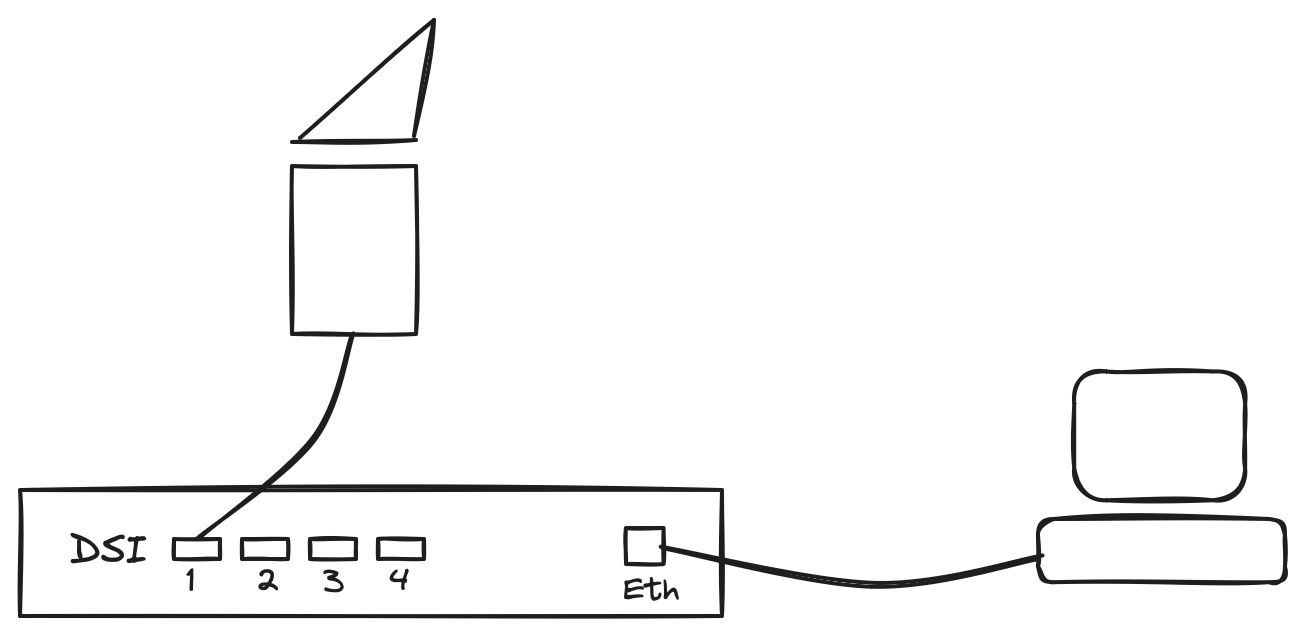

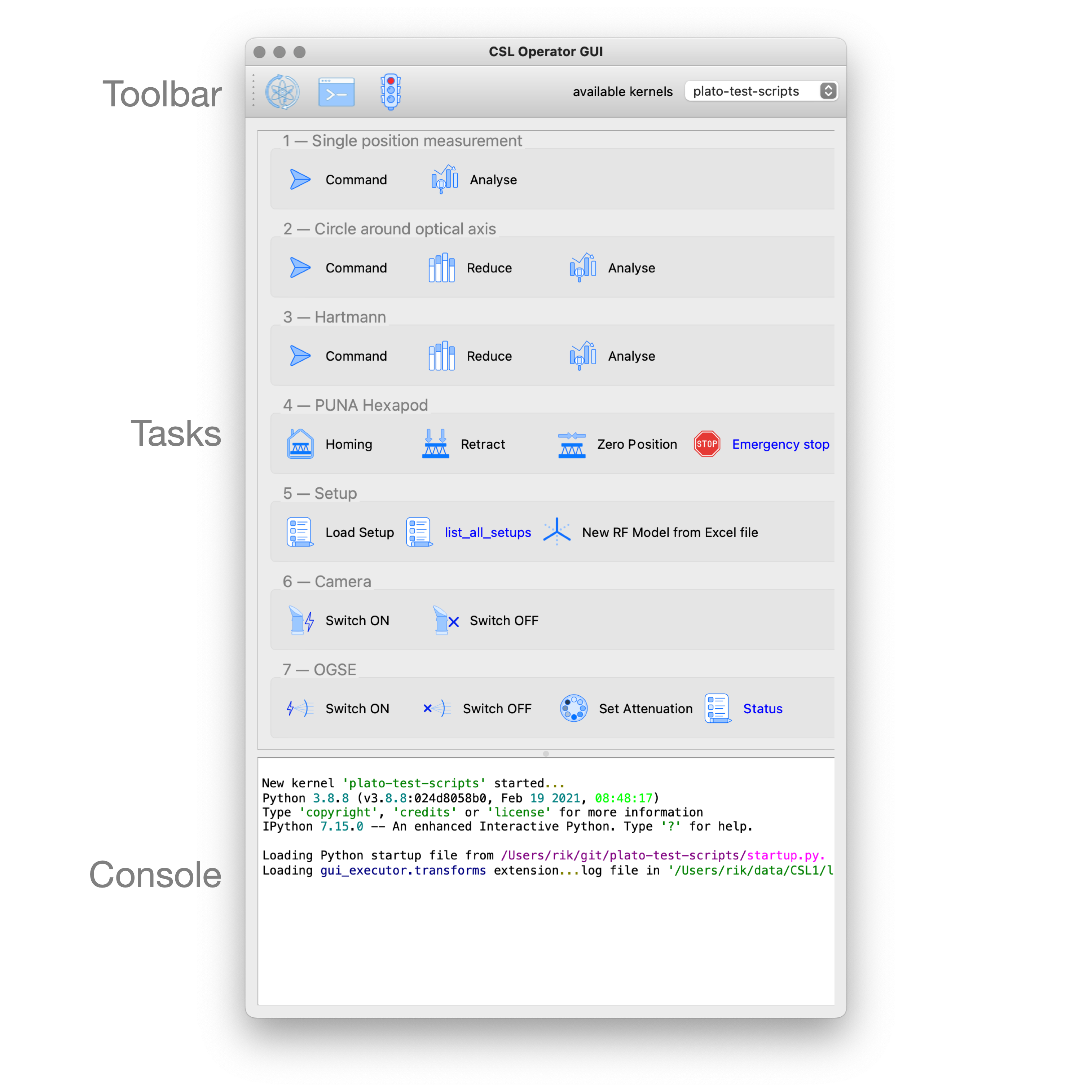

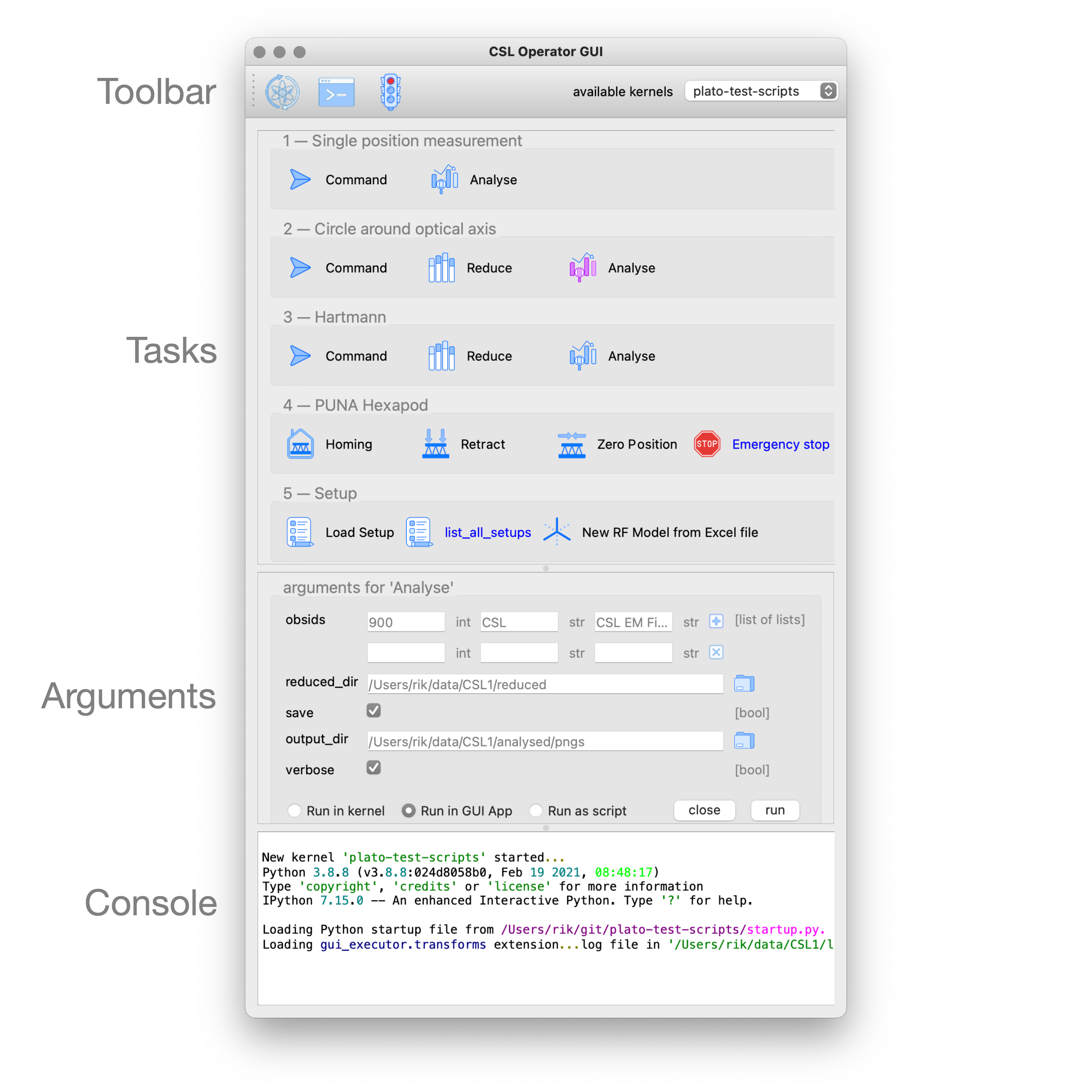

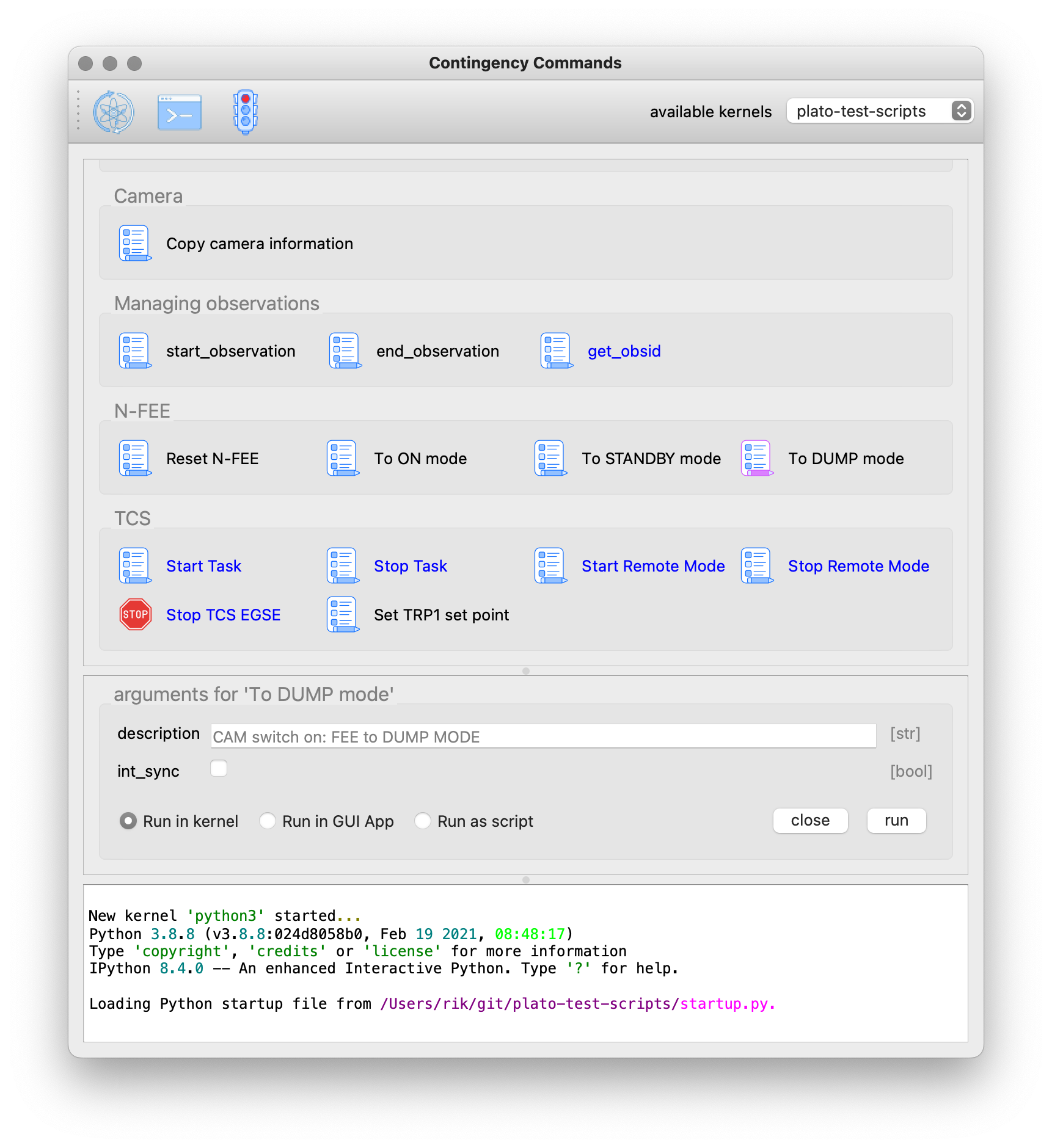

The figure above contains the three processes described above, the F-DPU (FastCameraDPUProcessor), the data processor (FastCameraDataProcessor) and the data dumper (data_dump). The communication between these processes is based on the ZeroMQ PUSH-PULL protocol. Since these processes always run on the CGSE server, we use the faster inter-process communication (IPC) instead of the transmission-control protocol (TCP). We see that data is pushed from the F-DPU to the Data Processor to the data dumper. The F-DPU Processor gets the data from the F-FEE, so there is transport of data and information between the F-DPU and the DSI, which is the SpaceWire interface connected to the F-FEE.

| Please note the Storage Manager still receives and handles all housekeeping and auxiliary data that is sent out by the core and device control servers, and the file generation services. The data_dump process described here will only handle data that is sent by the F-DPU and originates from the F-FEE. |

Of course, the data dumper shall also be able to retrieve commands, mainly from the F-DPU, to synchronise data flow or update configuration settings. This is done through the ZeroMQ DEALER-ROUTER protocol which allows to send commands to the data dumper and request a return value only when needed. For example, if the data dumper needs to update its Setup to the latest version, you wouldn’t require a reply when you send a reload-setup command. On the other hand, if you request status information from the data dumper you do expect a reply.

5.4.1. The data flow

This section explains what kind of data the data dumper receives and how this is handled. All data is stored into an HDF5 file. The format of this file is explained in the CGSE ICD [PLATO-KUL-PL-ICD-0002] section 4.2.

- Timecode

-

The timecode is an integer between 0 and 63 and it is sent by the F-FEE immediately after the reception of a synchronisation pulse. The timecode marks the start of a readout cycle. So, when the data dumper receives a timecode, it closes its current HDF5 file and creates a new HDF5 file. The HDF5 files are created in the

daily/YYYYMMDDfolder at the location given by thePLATO_DATA_STORAGE_LOCATIONenvironment variable. An example name for an HDF5 file that was created on our system that was connected to the EM in Leuven:/data/KUL/daily/20241007/20241007_KUL_F-FEE_SPW_00042.hdf5.Remember, each timecode creates a new HDF5 file. That is every 2.5 seconds.

- Housekeeping Packets

-

The data dumper receives housekeeping packets for the DEB and the active AEBs. This housekeeping is only retrieved when the F-FEE is actually sending out image data. The housekeeping is stored in the same group as its image data (see further). The dataset names are

hk_aebandhk_deb. - Housekeeping Data

-

The first thing the F-DPU Processor does when it receives a timecode, is requesting the register map and the housekeeping data from the DEB and all AEBs. The difference from housekeeping packets that are sent by the F-FEE is that housekeeping data, that is retrieved by RMAP commanding, doesn’t have a packet header. The housekeeping data is saved in the group

hk-dataand contains the datasets 'DEB', 'AEB1', 'AEB3', 'AEB3', and 'AEB4' and you should find this in every single HDF5 file. - Register Map

-

The register map, which is the configuration memory area of the F-FEE, is retrieved from the F-FEE by the F-DPU using a few RMAP memory read requests. The register map is stored in the HDF5 file in a dataset named 'register'. Each HDF5 file shall have this dataset, and it contains the configuration as was commanded and as it will be active starting on the next timecode/HDF5 file.

- Commands

-

The data dumper also retrieves all commands that have been send to the F-FEE with their arguments. This information is stored in the group

commands. The first six commands will always becommand_sync_register_map(),command_deb_read_hk(), andcommand_aeb_read_hk('AEB1')for each AEB. - CCD Image data

-

When the F-FEE is configured in science mode (DEB=FULL_IMAGE, AEBx=IMAGE and DTC_IN_MODE set accordingly), the data dumper will receive all image data as SpaceWire data packets, each containing the readouts of one row of the CCD. All these packets are stored in the group data which is a subgroup of

AEBx-Swhere 'x'=1,2,3,4 and 'S'='E' or 'F'. For example, when image data is sent for the E-side of AEB2, the groupAEB2_Ewill contain a groupdatawith all the image data packets, and also the groupshk_debandhk_aeb(see above). In the default readout mode, there shall be 2260 datasets with image data inside thedatagroup.When image data is available in the HDF5 file, the File group will have the attribute

has_dataset to True, if no image data was sent, this attribute will be False.Also the

datagroup has two attributes attached when image data is available, i.e.ccd_sideand theoverscan_linesOn the F-FEE EM we suffered from buffer overflow errors when receiving image data, resulting in corrupt data packets being sent to the data dumper. These packets are stored in the group

spw-data. What we saw for the EM is that these corrupt packets were sometime longer, sometimes shorter than the valid data packets, but the amount of packets matched the amount of missing packets in thedatagroup. - Observation ID

-

When an observation is started, the OBSID will be sent to the data dumper. The OBSID will be saved in the

obsidgroup.

5.4.2. Commanding the data dumper

The data dumper listens to commands coming in on the DATA_DUMPER.COMMANDING_PORT. A number of the available commands are also provided as terminal commands. That is described for each of the commands below.

The following commands are currently recognised:

- HELO

-

This command is used to register a process that wants to communicate with the data dumper. The data dumper keeps a registry of all registered processes. The command expects the name of the process that registers. A reply is sent on request.

- BYE

-

This command is used to unregister from the data dumper. No reply is sent.

- LOCATION

-

This command will set the new location for the HDF5 files after checking if the location exists and is writable. An acknowledgment will be sent on request.

The corresponding terminal command:

data_dump set-location <fully qualified folder name> - RELOAD-SETUP

-

This command is used to instruct the data dumper to reload the latest Setup from the configuration manager. An acknowledgment will be sent on request.

The corresponding terminal command:

data_dump reload-setup - STATUS

-

This command requests status information from the data dumper. The reply string will look like this:

Data Dumper: Version : 0.4.0 Hostname : 10.33.179.160 Commanding port : 30305 Monitoring port : 30304 Data dump port : 30104 Data location : /data/KUL/daily/20241008 Site ID : KUL Setup loaded : 00035 Scheduler : ['Scheduler.set_new_location → 2024-10-09 00:02:00+00:00'] Dealers : DATA-DUMP-STATUS, F-DPU

The corresponding terminal command is:

data_dump status - QUIT

-

This command will terminate the data dumper process. All open files and connections will be closed and the scheduler will be stopped. This command will only be accepted if it is sent by a registered process. No reply is sent.

5.4.3. Start and stop the data_dump

The data dumper is made part of the core services. That means it will show up in the Process Manager GUI in the section on the core services. It also means that, for operational systems that are working with the F-CAM, the data_dump process will be started and maintained by systemd, i.e. the process will automatically start at system startup and restarted when quit or crashed. No need to worry about it 🙂.

If you want to check if the data dumper is running and properly configured, use the status command:

$ data_dump status Data Dumper: Version : 0.4.0 Hostname : 10.33.179.160 Commanding port : 30305 Monitoring port : 30304 Data dump port : 30104 Data location : /data/KUL/daily/20241008 Site ID : KUL Setup loaded : 00035 Scheduler : ['Scheduler.set_new_location → 2024-10-09 00:02:00+00:00'] (1) Dealers : DATA-DUMP-STATUS, F-DPU (2)

| 1 | The scheduler takes care of setting the correct location for the next day. This entry shows the function that will be called at a given date/time. |

| 2 | The dealers are the processes that are registered to the data dumper. |

For developers who run their own core services on their development machine, the data_dump process is started and stopped together with all other core services using the invoke start-core-egse and invoke stop-core-services commands.

5.5. The Process Manager

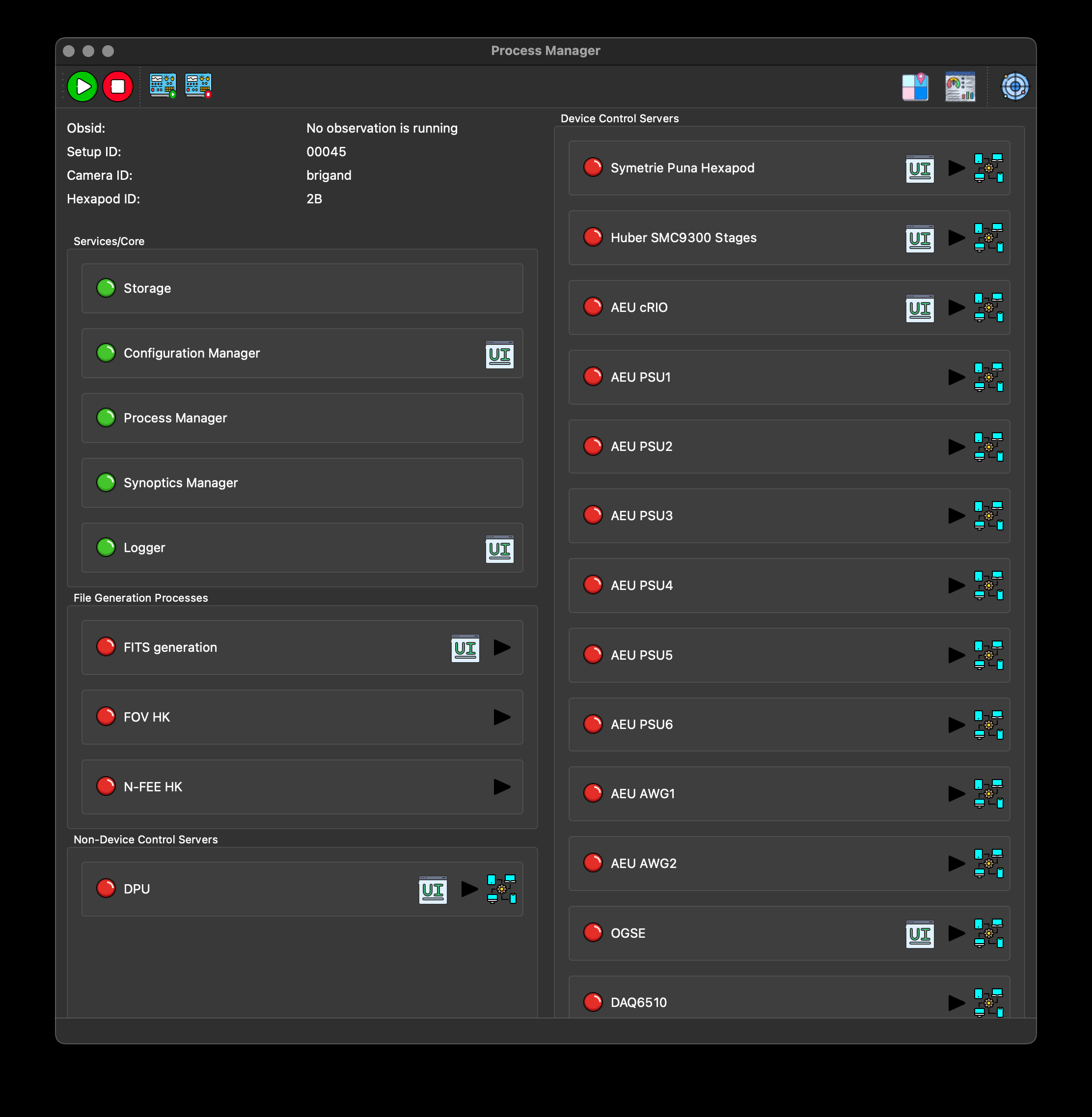

The job of the process manager is to keep track and monitor the execution of running processes during the Camera Tests.

There are known processes that should be running all the time, i.e. the configuration manager, and the Storage Manager. Then, there are device control servers that are dependent on the Site and the Setup at that site. The process manager needs to know which device control servers are available and how to contact them. That information is available in the configuration manager. We need to decide how the interface between the PM and the CM looks like and what information is exchanged in which format.

5.6. The Synoptics Manager

Synoptics = in a form of a summary or synopsis; taking or involving a comprehensive mental view. According to the Oxford Dictionary.

-

what is this?

-

Which parameters are Synoptic?

-

From the device to the Grafana screen, what is the data flow, where are the name changes, where are the calibrations….

In all involved test facilities, the EGSE is used to perform the same set of basic operations: monitoring temperatures, changing the intensity of the source and point it somewhere, acquiring images, etc. However, the devices that are used to perform these tasks are not everywhere the same (e.g. the OGSE with its lamp and filterwheels, the DAQs for temperature acquisition, etc.).

Each (device) control server has a dedicated CSV file in which the housekeeping information is stored and often the name of the parameters indicates the test facility at which they were acquired.

It is not inconvenient if user need to memorise the HK names for all test facilities, it also makes the test and analysis scripts more complex.

The Synoptics Manager stores this information in one centralised location (the synoptics file) with generic parameter names.

5.6.1. Synoptical Parameters

The housekeeping parameters that are stored in the synoptics are:

-

Calibrated temperatures, acquired by the FEE, TCS, and facility DAQs;

-

OGSE information (source intensity, measured power, whether the lamp and/or the laser are on);

-

Source position, both actual and commanded, as field angles (θ,φ).

5.7. The Telemetry (TM) Dictionary

The tm-dicionary.csv file (further referred to as the "telemetry ™ dictionary") provides an overview of all housekeeping (HK) and metrics parameters in the EGSE system. It is used:

-

By the

get_housekeepingfunction (inegse.hk) to know in which file the values of the requested HK parameter should be looked for; -

To create a translation table to convert — in the

get_housekeepingfunction of the device protocols — the original names from the device itself to the EGSE-conform name (see further); -

For the HK that should be included in the synoptics: to create a translation table to convert the original device-specific (but EGSE-conform) names to the corresponding synoptical name in the Synoptics Manager (in

egse.synoptics).

5.7.1. The File’s Content

For each device we need to add all HK parameters to the TM dictionary. For each of these parameters you need to add one line with the following information (in the designated columns):

| Column name | Expected content |

|---|---|

TM source |

Arbitrary (but clear) name for the device. Ideally this name is short but clear enough for outsiders to understand what the device/process is for. |

Storage mnemonic |

Storage mnemonic of the device. This will show up in the filename of the device HK file and can be found in the settings file ( |

CAM EGSE mnemonic |

EGSE-conform parameter name (see next Sect.) for the parameter. Note that the same name should be used for the HK parameter and the corresponding metrics. |

Original name in EGSE |

In the |

Name of corresponding timestamp |

In the device HK files, one of the columns holds the timestamp for the considered HK parameter. The name of that timestamp column should go in this column of the TM dictionary. |

Origin of synoptics at CSL |

Should only be filled for the entries in the TM dictionary for the Synoptics Manager. This is the original EGSE-conform name of the synoptical parameter in the CSL-specific HK file comprising this HK parameter. Leave empty for all other devices! |

Origin of synoptics at SRON |

Should only be filled for the entries in the TM dictionary for the Synoptics Manager. This is the original EGSE-conform name of the synoptical parameter in the SRON-specific HK file comprising this HK parameter. Leave empty for all other devices! |

Origin of synoptics at IAS |

Should only be filled for the entries in the TM dictionary for the Synoptics Manager. This is the original EGSE-conform name of the synoptical parameter in the IAS-specific HK file comprising this HK parameter. Leave empty for all other devices! |

Origin of synoptics at INTA |

Should only be filled for the entries in the TM dictionary for the Synoptics Manager. This is the original EGSE-conform name of the synoptical parameter in the INTA-specific HK file comprising this HK parameter. Leave empty for all other devices! |

Description |

Short description of what the parameter represents. |

MON screen |

Name of the Grafana dashboard in which the parameter can be inspected. |

unit cal1 |

Unit in which the parameter is expressed. Try to be consistent in the use of the names (e.g. Volts, Ampère, Seconds, Degrees, DegCelsius, etc.). |

offset b cal1 |

For raw parameters that can be calibrated with a linear relationship, this column holds the offset |

slope a cal1 |

For raw parameters that can be calibrated with a linear relationship, this column holds the slope |

calibration function |

Not used at the moment. Can be left emtpy. |

MAX nonops |

Maximum non-operational value. Should be expressed in the same unit as the parameter itself. |

MIN nonops |

Minimum non-operational value. Should be expressed in the same unit as the parameter itself. |

MAX ops |

Maximum operational value. Should be expressed in the same unit as the parameter itself. |

MIN ops |

Minimum operational value. Should be expressed in the same unit as the parameter itself. |

Comment |

Any additional comment about the parameter that is interesting enough to be mentioned but not interesting enough for it to be included in the description of the parameter. |

Since the TM dictionary grows longer and longer, the included devices/processes are ordered as follows (so it is easier to find back the telemetry parameters that apply to your TH):

-

Devices/processes that all test houses have in common: AEU, N-FEE, TCS, Synoptics Manager, etc.

-

Devices that are CSL-specific;

-

Devices that are SRON-specific;

-

Devices that are IAS-specific;

-

Devices that are INTA-specific.

5.7.2. EGSE-Conform Parameter Names

The correct (i.e. EGSE-conform) naming of the telemetry should be taken care of in the get_housekeeping method of the device protocols.

Common Parameters

A limited set of devices/processes is shared by (almost) all test houses. Their telemetry should have the following prefix:

| Device/process | Prefix |

|---|---|

Configuration Manager |

CM_ |

AEU (Ancillary Electrical Unit) |

GAEU_ |

N-FEE (Normal Front-End Electronics) |

NFEE_ |

TCS (Thermal Control System) |

GTCS_ |

FOV (source position) |

FOV_ |

Synoptics Manager |

GSYN_ |

TH-Specific Parameters

Some devices are used in only one or two test houses. Their telemetry should have TH-specific prefix:

| TH | Prefix |

|---|---|

CSL |

GCSL_ |

CSL1 |

GCSL1_ |

CSL2 |

GCSL2_ |

SRON |

GSRON_ |

IAS |

GIAS_ |

INTA |

GINTA_ |

5.7.3. Synoptics

The Synoptics Manager groups a pre-defined set of HK values in a single file. It’s not the original EGSE-conform names that are use in the synoptics, but names with the prefix GSYN_. The following information is comprised in the synoptics:

-

Acquired by common devices/processes:

-

Calibrated temperatures from the N-FEE;

-

Calibrated temperatures from the TCS;

-

Source position (commanded + actual).

-

Acquired by TH-specific devices:

-

Calibrated temperatures from the TH DAQs;

-

Information about the OGSE (intensity, lamp and laser status, shutter status, measured power).

For the first type of telemetry parameters, their original EGSE-conform name should be put into the column CAM EGSE mnemonic, as they are not TH-specific.

The second type of telemetry parameters is measured with TH-specific devices. The original TH-specific EGSE-conform name should go in the column Origin of synoptics at ....

5.7.4. Translation Tables

The translation tables that were mentioned in the introduction, can be created by the read_conversion_dict function in egse.hk. It takes the following input parameters:

-

storage_mnemonic: Storage mnemonic of the device/process generating the HK; -

use_site: Boolean indicating whether you want the translation table for the TH-specific telemetry rather than the common telemetry (Falseby default).

To apply the actual translation, you can use the convert_hk_names function from egse.hk, which takes the following input parameters:

-

original_hk: HK dictionary with the original names; -

conversion_dict: Conversion table you got as output from theread_conversion_dictfunction.

5.7.5. Sending HK to Synoptics

When you want to include HK of your devices, you need to take the following actions:

-

Make sure that the TM dictionary is complete (as described above);

-

In the device protocol:

-

At initialisation: establish a connection with the Synoptics Manager:

self.synoptics = SynopticsManagerProxy() -

In

get_housekeeping(both take the dictionary with HK as input):-

For TH-specific HK:

self.synoptics.store_th_synoptics(hk_for_synoptics); -

For common HK:

self.synoptics.store_common_synoptics(hk_for_synoptics).

-

-

Please, do not introduce new synoptics without further discussion!

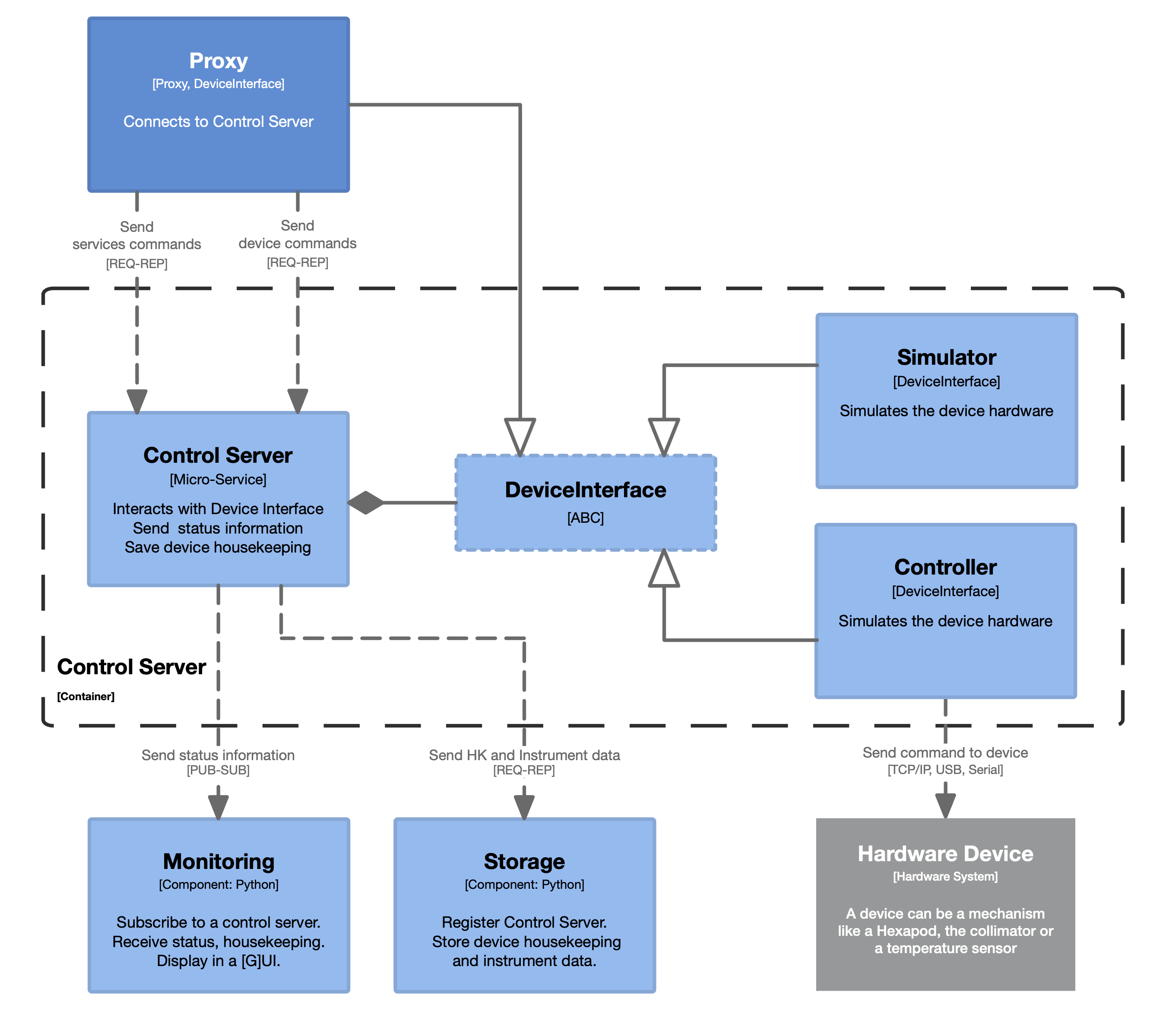

6. The Commanding Concept

-

Proxy – Protocol – Controller

-

Control Servers

-

YAML command files

-

Dynamic commanding

6.1. The Control Server

TODO:

-

Explain what the CS does during startup and what happens when this fails.

-

description of the

control.pymodule

6.1.1. Create multiple control servers for identical devices

This section describes what you can do when you have identical devices, such as two LakeShore Temperature Controllers or multiple power supplies, and need to use them in the same project at the same site. While the control servers are configured in the Settings YAML file with, among other things, IP address and port numbers, they still need to be addressed in the Setup for configuring your equipment at a certain time and location. Specifically, you will need to create the proper Proxy class to communicate with your control server.

The specificity of the equipment used in your lab is defined in the Setup, and from the Setup, you will usually create the Controller or Proxy objects to access and control your equipment. This can be done in two different ways, which are explained in the next two sections.

Directly define the device controller class

In the Setup, you will define your devices as in the excerpt below. This will create a PunaProxy object whenever you request the device from the Setup.

gse:

hexapod:

device: class//egse.hexapod.symetrie.PunaProxySome devices contain more than one controller and need several control servers to manage that device. An example of this type is the AEU Test EGSE. This device contains six power supply units and two analog wave generators. When requesting a Proxy object to access the device, say PSU 2, the PSU identifier (the number '2' in this case) needs to be passed into the __init__() method of the Proxy class. To accomplish this, the Setup for this device contains an additional device argument:

gse:

aeu:

psu2:

device: class//egse.aeu.aeu.PSUProxy

device_args: [2]All the values that are given in the list for the device_args are passed as positional arguments to the __init__() method of the PSUProxy class. When requesting the device from the Setup, you will get a PSUProxy object. The super class was however instantiated with the port number that was defined for PSU 2 in the settings file.

>>> psu = setup.gse.aeu.psu2.device

>>> psu

<egse.aeu.aeu.PSUProxy object at 0x7f83dafc2460>

>>> psu.name

'PSU2'

>>> psu.get_endpoint()

'tcp://localhost:30010'Use a factory to create a device

In the Setup you can, instead of a class// definition, use a factory// definition. A Factory is a class with a specific interface to create the class object that you intended based on a number of arguments that are also specified in the Setup. Let’s first look at an example for the PUNA Hexapod. The following excerpt from a Setup defines the hexapod device as a ControllerFactory. There are also two device arguments defined, a device name and an idenditier.

gse:

hexapod:

device: factory//egse.hexapod.symetrie.ControllerFactory

device_args:

device_name: PUNA Hexapod

device_id: 1ASo, what happens when you access this device from the Setup. Let’s look at the following code snippet from your Python REPL. The Setup has been loaded already in the setup variable. To access the device, use the dot (.) notation.

>>> puna = setup.gse.hexapod.device

>>> puna

<egse.hexapod.symetrie.puna.PunaController object at 0x7f83e039ba60>

>>> setup.gse.hexapod.device_args.device_id = "1B"

>>> puna = setup.gse.hexapod.device

>>> puna

<egse.hexapod.symetrie.punaplus.PunaPlusController object at 0x7f83dab8ed00>When we –with the given Setup– request the hexapod device, we get a PunaController object as you can see on line 3,

when we change the device_id to '1B' and request the hexapod device, we get the PunaPlusController object (line 7). Changing the device_id resulted in a different class created by the Factroy. Any Factory class that is used this way in a Setup shall implement the create(..) method which is defined by the DeviceFactoryInterface class. Our ControllerFactory for the hexapod inherits from this interface and implements the method.

class DeviceFactoryInterface:

def create(self, **kwargs):

...

class ControllerFactory(DeviceFactoryInterface):

"""

A factory class that will create the Controller that matches the given device name

and identifier.

The device name is matched against the string 'puna' or 'zonda'. If the device name

doesn't contain one of these names, a ValueError will be raised. If the device_name

matches against 'puna', the additional `device_id` argument shall also be specified.

"""

def create(self, device_name: str, *, device_id: str = None, **_ignored):

if "puna" in device_name.lower():

from egse.hexapod.symetrie.puna import PunaController

from egse.hexapod.symetrie.punaplus import PunaPlusController

if not device_id.startswith("H_"):

device_id = f"H_{device_id}"

hostname = PUNA_SETTINGS[device_id]["HOSTNAME"]

port = PUNA_SETTINGS[device_id]["PORT"]

controller_type = PUNA_SETTINGS[device_id]["TYPE"]

if controller_type == "ALPHA":

return PunaController(hostname=hostname, port=port)

elif controller_type == "ALPHA_PLUS":

return PunaPlusController(hostname=hostname, port=port)

else:

raise ValueError(

f"Unknown controller_type ({controller_type}) for {device_name} "

f"– {device_id}"

)

elif "zonda" in device_name.lower():

from egse.hexapod.symetrie.zonda import ZondaController

return ZondaController()

else:

raise ValueError(f"Unknown device name: {device_name}")The create(..) method returns a device controller object for either the PUNA Alhpa or Alpha+ Controller, or for the ZONDA Controller. Each of these represent a Symétrie hexapod. The decision for which type of controller to return is first based on the device name, then on settings that depend on the device identifier. Both of these parameters are specified in the Setup (see above) and passed into the create() method of the factory class at the time the user requests the device from the setup.

The TYPE, HOSTNAME, and PORT are loaded from the PUNA_SETTINGS dictionary. These settings are device specific and are defined in the local setting for your site. The PUNA_SETTINGS is a dictionary that represents part of the overall Settings. The factory class uses these device configuration settings to decide which controller object shall be instantiated and what the parameters for the creation shall be.

└── PMAC Controller

├── H_1A

│ ├── TYPE: ALPHA

│ ├── HOSTNAME: XXX.XXX.XXX.X1A

│ └── PORT: 1025

└── H_1B

├── TYPE: ALPHA_PLUS

├── HOSTNAME: XXX.XXX.XXX.X1B

└── PORT: 23

You might think that it would be simpler to specify the device configuration settings also in the Setup and pass all this information to the create method when requesting the device from the Setup. However, this would undermine the specific difference between Settings and Setup and also would invalidate the direct creation of the controller object without the use of a factory class.

6.2. The Proxy Class

The Proxy class automatically tries to connect to its control server. When the control server is up and running, the Proxy loads the device commands from the control server. When the control server is not running, a warning is issued, and the device commands are not loaded. If you then try to call one of the device methods, you will get a NotImplementedError. The ZeroMQ connection initiated by the Proxy will just sit there until the control server comes up, so you can fix the problem with the control server and call the load_commands() method on the Proxy again to load the commands.

6.3. The Protocol Class

The DPU Protocol starts a thread called DPU Processor Thread. This thread is a loop that continuously reads packets from the FEE and writes RMAP commands when there is a command put on the queue. When the control server receives a quit command, this thread also needs to be terminated gracefully since it has a connection and registration with the Storage manager. Therefore, the Protocol class has a quit() method which is called by the control server when it is terminated. The DPU Protocol class implements this quit() method to notify the DPU Processor Thread. Other Protocol implementations usually don’t implement the quit() method.

Another example of a control server that starts a sub-process through its Protocol class in the TCS control server. The control server start the TCS Telemetry process to handle reading housekeeping from the TCS EGSE using a separate port [6667 by default].

6.4. Services

The control servers are the single point access to your equipment and devices. Commanding the device is handled by the Protocol and Controller classes on the server side and by the Proxy class on the client side. In addition to commanding your devices, you would also like to have some control on the behaviour of your device control servers. That is where the Service slass comes into play. The Services provide common commands that act on the control server instead of on the device. These commands are send through a Service Proxy that you can request for each of the control servers. Below is a code snippet asking for the service proxy of the hexapod PUNA and requesting the status of the control server process.

>>> from egse.hexapod.symetrie.puna import PunaProxy

>>> with PunaProxy() as puna, puna.get_service_proxy() as srv:

... print(srv.get_process_status())

{

'timestamp': '2023-03-29T08:00:31.708+0000',

'delay': 1000,

'PID': 39242,

'Up': 486.7373969554901,

'UUID': UUID('a74c0f52-ce06-11ed-a5d1-acde48001122'),

'RSS': 115720192,

'USS': 98033664,

'CPU User': 10.516171776,

'CPU System': 3.300619776,

'CPU count': 6,

'CPU%': 2.9

}